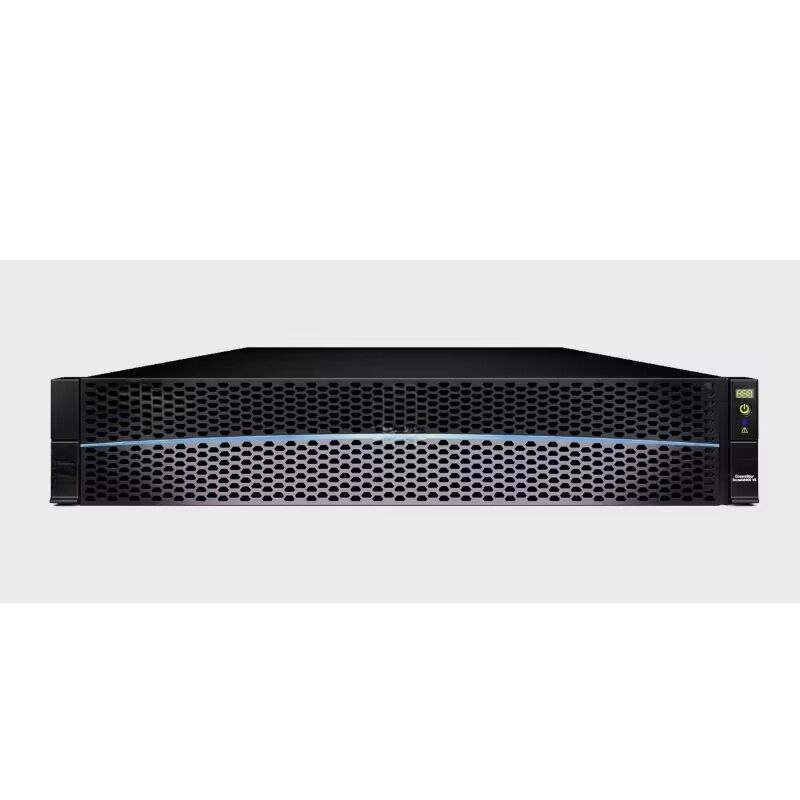

Atlas 800I A2 Inference Server

Best-Selling Atlas 800I A2 Inference Server: 4U AI GPU Server with Ultra-High Computing Power

- ≥ 4 ARM-based Kunpeng 920 processors

- ≥ 48 physical cores per CPU

- Clock frequency ≥ 2.6 GHz

- Thermal design power (TDP) ≤ 150 W

- ≥ 16 DIMMs

- ≥ 32 GB per DDR4 memory module

- ≥ 2 × 1.92 TB SATA SSDs

- ≥ 4 × 3.84 TB SATA SSDs

- ≥ 8 AI accelerator cards

- ≥ 32 GB of video memory per card

- Fully populated with ≥ 4 × 25G optical modules

- Fully populated with ≥ 8 × 200G optical modules

- Server management system supports domestically developed management chips

- BIOS interface available in Chinese
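
The per-item minimums in the list above imply minimum totals for a whole server. The short Python sketch below only does that arithmetic; the variable and function names are illustrative and not part of any vendor tool. It assumes every item is at exactly its listed minimum, so real configurations may exceed these figures. The optical figure is the sum of nominal module line rates, not measured throughput.

```python
# Minimum per-item figures copied from the specification list above.
# All names here are hypothetical and chosen for illustration only.
CPUS, CORES_PER_CPU = 4, 48          # >= 4 processors, >= 48 cores each
DIMMS, GB_PER_DIMM = 16, 32          # >= 16 DIMMs, >= 32 GB each
CARDS, GB_PER_CARD = 8, 32           # >= 8 cards, >= 32 GB each
SSDS_TB = [1.92] * 2 + [3.84] * 4    # 2 x 1.92 TB plus 4 x 3.84 TB SATA SSDs
OPTICAL_GBPS = [25] * 4 + [200] * 8  # 4 x 25G plus 8 x 200G optical modules


def minimum_totals() -> dict:
    """Return server-wide totals when every item is at its listed minimum."""
    return {
        "cpu_cores": CPUS * CORES_PER_CPU,
        "system_memory_gb": DIMMS * GB_PER_DIMM,
        "card_memory_gb": CARDS * GB_PER_CARD,
        "ssd_raw_tb": round(sum(SSDS_TB), 2),  # raw capacity, before any RAID
        "optical_gbps": sum(OPTICAL_GBPS),     # nominal line rate only
    }


if __name__ == "__main__":
    for name, value in minimum_totals().items():
        print(f"{name}: {value}")
```

At the listed minimums this works out to 192 CPU cores, 512 GB of system memory, 256 GB of card memory, 19.2 TB of raw SSD capacity, and 1,700 Gbit/s of nominal optical line rate.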

Product description:

The Atlas 800I A2 inference server uses an eight-module design for efficient inference and delivers strong AI inference performance, with advantages in computing power, memory bandwidth, and interconnect capability. It is well suited to generative large-model inference, including content generation scenarios such as intelligent customer service, copywriting, and knowledge accumulation. It also supports NPU interconnection, which improves the inference efficiency of large models.