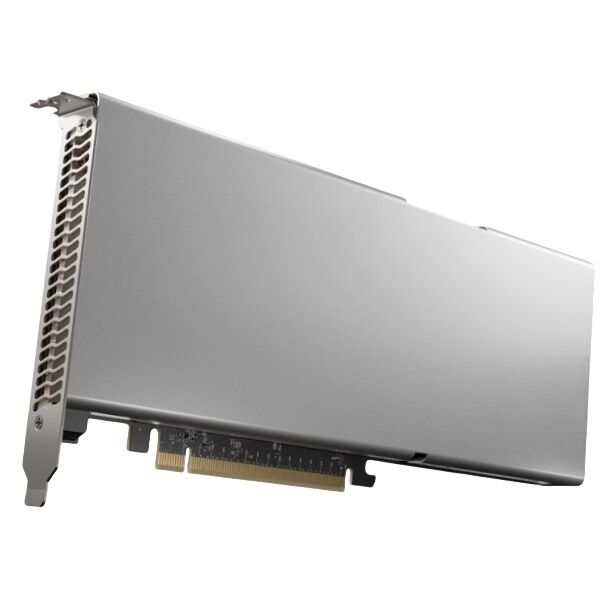

H100 GPU

The NVIDIA H100 is a data-center GPU for artificial intelligence and high-performance computing, built on the Hopper architecture. It contains 80 billion transistors and introduces fourth-generation Tensor Cores, which accelerate both AI training and inference. In its SXM form factor, the H100 has 16,896 CUDA cores and 80 GB of HBM3 memory with more than 3 TB/s of memory bandwidth; the PCIe variant has fewer cores and lower memory bandwidth. The Transformer Engine pairs the Tensor Cores with software that switches between FP8 and 16-bit precision, and it is aimed at transformer models such as large language models.

For multi-GPU scaling, NVLink connects several H100s directly to one another. The H100 also supports confidential computing, which protects data and code while they are in use on the GPU and makes the card a better fit for enterprise deployments. NVIDIA also positions the H100 as energy efficient, with dynamic power management that adjusts power draw to the workload.
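To put the capacity and bandwidth figures above in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes a flat 3 TB/s (the "over 3 TB/s" figure rounded down) and ignores every real-world overhead, so the result is only a theoretical lower bound. The function name `min_sweep_time_ms` is made up for this illustration.

```python
def min_sweep_time_ms(bytes_to_read: float, bandwidth_bytes_per_s: float) -> float:
    """Lower bound, in milliseconds, on streaming `bytes_to_read` from memory once."""
    if bandwidth_bytes_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return bytes_to_read / bandwidth_bytes_per_s * 1e3


# Figures taken from the text above; decimal units (1 GB = 1e9 bytes) are an assumption.
HBM_CAPACITY_BYTES = 80e9      # 80 GB of HBM3
HBM_BANDWIDTH_BYTES_S = 3e12   # 3 TB/s, rounded down from "over 3 TB/s"

full_sweep_ms = min_sweep_time_ms(HBM_CAPACITY_BYTES, HBM_BANDWIDTH_BYTES_S)
print(f"Reading all 80 GB once takes at least {full_sweep_ms:.1f} ms")
```

In other words, at the quoted bandwidth the GPU could in principle read its entire memory a few dozen times per second. That matters for workloads such as large language model inference, where the model weights are read from memory repeatedly.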